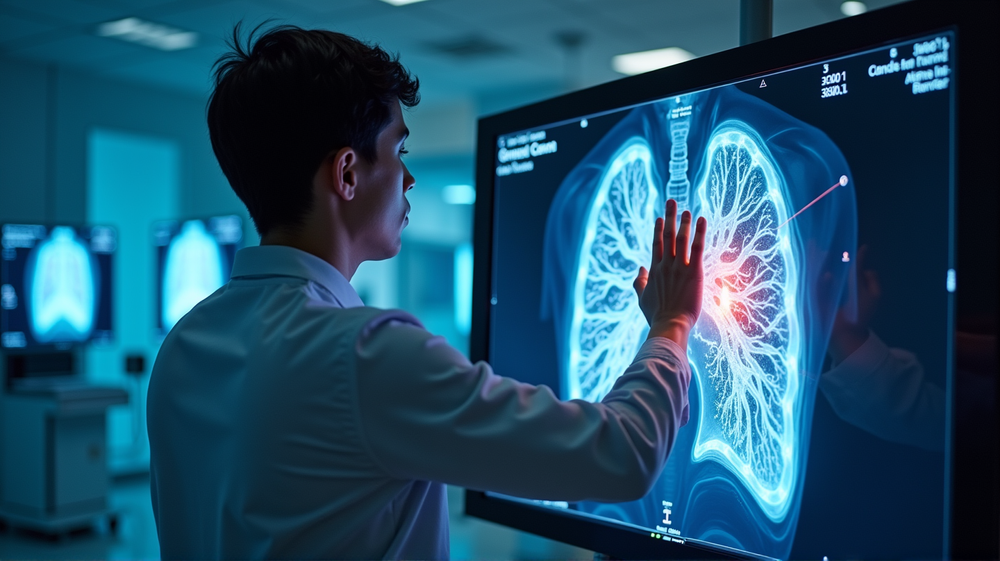

Lung cancer remains a formidable global health challenge, claiming 1.8 million lives each year, and early detection is key to combating it. Conventional diagnosis relies on subjective visual analysis of CT images, and many cases are identified only at a late stage, when treatment options and survival rates are limited. Our approach addresses this with a custom convolutional neural network (CNN) combined with an explainable AI technique, gradient-weighted class activation mapping (Grad-CAM).

Understanding the Need for Explainable AI

Traditional methods are time-consuming and error-prone because they depend on human judgement. Our research aims to improve on them with a reliable classification system that distinguishes between three types of lung cancer: squamous cell carcinoma, large cell carcinoma, and adenocarcinoma. For physicians, transparency and interpretability are crucial, because they make it possible to check the AI's outputs against their own clinical reasoning.

Achieving High Accuracy

Our model, trained on a dataset of CT images, achieves an overall accuracy of 93.06%. This result shows that the CNN separates the cancer types it was trained on reliably, and the model pairs this quantitative performance with explainability features that show how each prediction was reached. According to Nature, advances of this kind enable earlier intervention, which leads to higher survival rates and an improved prognosis for lung cancer patients.
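Overall accuracy is the fraction of all test images whose predicted class matches the true class, so a single figure can hide weaker performance on one subtype. The minimal sketch below computes overall accuracy alongside per-class recall from a confusion matrix. The helper names and the 3×3 matrix are hypothetical and made up purely for illustration; they are not results from the study.

```python
def overall_accuracy(confusion):
    """Fraction of samples on the diagonal (true class == predicted class).

    confusion[i][j] counts images of true class i predicted as class j.
    """
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


def per_class_recall(confusion):
    """For each true class, the fraction of its images predicted correctly."""
    return [row[i] / sum(row) for i, row in enumerate(confusion)]


# Hypothetical counts for illustration only (not results from the study).
# Rows/columns: adenocarcinoma, large cell carcinoma, squamous cell carcinoma.
toy_confusion = [
    [8, 1, 1],
    [0, 9, 1],
    [1, 0, 9],
]

print(f"overall accuracy: {overall_accuracy(toy_confusion):.4f}")  # 0.8667
print("per-class recall:", per_class_recall(toy_confusion))  # [0.8, 0.9, 0.9]
```

Reporting per-class recall next to the overall figure makes it visible when one cancer type is classified less reliably than the others.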

The Role of Explainability in Clinical Application

Explainable AI plays a decisive role in clinical settings by building trust. Grad-CAM, for instance, uses the gradients of the predicted class score with respect to a convolutional layer's feature maps (typically the last one) to produce a heatmap of the CT image regions that most influenced the model's decision. This transparency is pivotal for fostering confidence among medical professionals and supports patient safety.
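The Grad-CAM weighting step can be sketched in a few lines. This is a minimal, framework-free illustration, not the authors' implementation: it assumes the activations A^k of the chosen convolutional layer and the gradients of the class score y^c with respect to them have already been extracted from a trained network (in practice via a deep learning framework's automatic differentiation). Each channel weight is the spatial average of that channel's gradient, the weighted maps are summed, and a ReLU keeps only regions that raise the class score. The function name `grad_cam` and the toy 2×2 inputs are hypothetical.

```python
def grad_cam(activations, gradients):
    """Compute a normalized Grad-CAM heatmap.

    activations: K feature maps A^k, each an H x W nested list, from the
        chosen convolutional layer (assumed already extracted).
    gradients: K maps of d(y^c)/d(A^k) with the same shape (assumed already
        computed by backpropagation).
    Returns an H x W heatmap scaled to [0, 1].
    """
    h, w = len(activations[0]), len(activations[0][0])

    # Channel weight alpha_k: global average of the gradient over all positions.
    weights = [sum(sum(row) for row in g) / (h * w) for g in gradients]

    # Weighted sum of feature maps: sum_k alpha_k * A^k.
    cam = [[0.0] * w for _ in range(h)]
    for alpha, a_k in zip(weights, activations):
        for i in range(h):
            for j in range(w):
                cam[i][j] += alpha * a_k[i][j]

    # ReLU: keep only regions that increase the class score.
    cam = [[max(0.0, v) for v in row] for row in cam]

    # Scale to [0, 1] for display; leave an all-zero map unchanged.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Toy example: channel 1 has positive gradients, channel 2 negative ones.
acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 2.0]]]
grads = [[[0.5, 0.5], [0.5, 0.5]], [[-0.25, -0.25], [-0.25, -0.25]]]
print(grad_cam(acts, grads))  # [[1.0, 0.0], [0.0, 0.0]]
```

In a real pipeline the resulting coarse map is upsampled to the CT slice's resolution and overlaid on the image, which is what lets a clinician see where the model was "looking".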

Bridging Technological Gaps in Cancer Detection

As stated in Nature, ongoing research is addressing significant challenges such as the “black-box” perception of AI models. Our methodology tackles this by producing visual explanations that clinicians can inspect directly, bridging the gap between machine learning advances and practical clinical application.

Conclusion: Towards a Future of Smarter Diagnostics

By combining high diagnostic accuracy with interpretability, our research offers a strong reference point for medical imaging. The implications for public health are broad: the approach could strengthen screening programs and support clinicians in making informed treatment decisions. Together with related advances, we aim for a future in which early detection of lung cancer is standard practice, substantially improving patient outcomes.

For more in-depth insights and access to the full dataset used in this study, see the full article in Nature. Our research paves the way for further exploration and adoption of AI in healthcare, with the goal of reducing the global burden of lung cancer.