YouTube is taking measures to address the rise of AI-generated content and deepfakes on its platform. In an effort to maintain transparency and protect its community, the video-sharing platform plans to implement several changes in the coming months.

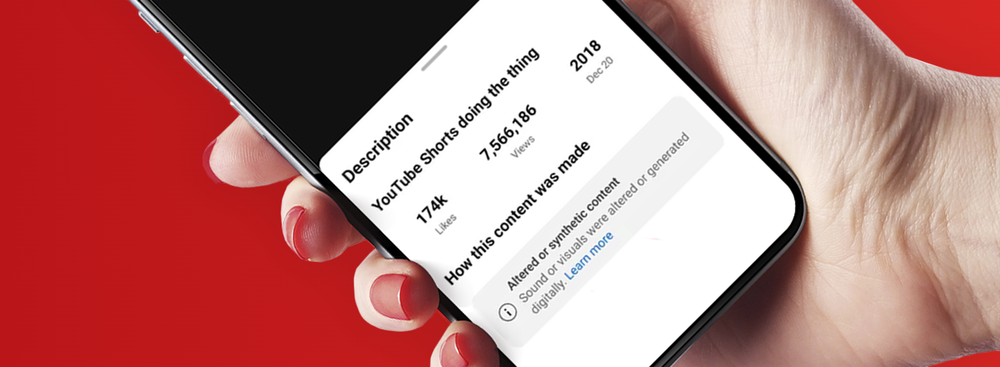

One significant change involves labeling. Creators will be required to disclose when they use artificial intelligence to produce "realistic" content, such as videos depicting events that never occurred or showing people saying or doing things they never did. Viewers will see this disclosure as a label in the video's description panel. For videos about sensitive topics such as elections or public health crises, a more prominent label will also appear on the video player itself.

Creators who fail to label AI-generated content accurately may face penalties, including content removal, suspension from the YouTube Partner Program, or other sanctions. YouTube says it will work closely with creators to make sure they understand and comply with the new requirements. However, what counts as "realistic" AI-generated content is not yet clearly defined, and YouTube plans to publish more specific guidance and examples.

YouTube also plans to let people request the removal of AI-generated or altered videos that simulate an identifiable individual, including their face or voice. When reviewing these requests, YouTube will weigh several factors, such as whether the content qualifies as parody or satire and whether the person depicted is a public figure.

These changes respond to the growing volume of AI-generated content, including deepfakes, and aim to balance creative freedom with community safety on the platform. YouTube says it will continue to refine its approach and to work with creators, artists, and other stakeholders to build an environment that is both responsible and innovative.