Meta, the parent company of Facebook and Instagram, has announced new measures to enhance the safety of teenagers using its platforms. These changes include tighter restrictions on messaging and improved parental controls aimed at safeguarding minors from unwanted interactions online.

Previously, Instagram barred adults from messaging teenagers who didn't follow them. Meta is now extending that restriction by default: users under 16 (under 18 in certain regions) can no longer be messaged by anyone they don't follow.

Big news! 🎉 We’re rolling out exciting new updates on Facebook and Instagram designed to help keep teens safe online.

🔒 New private messaging defaults for teens

✅ Enhanced parental supervision features

Get all the details here 👉 https://t.co/J4Oc0esAGT

Meta (@Meta), January 25, 2024

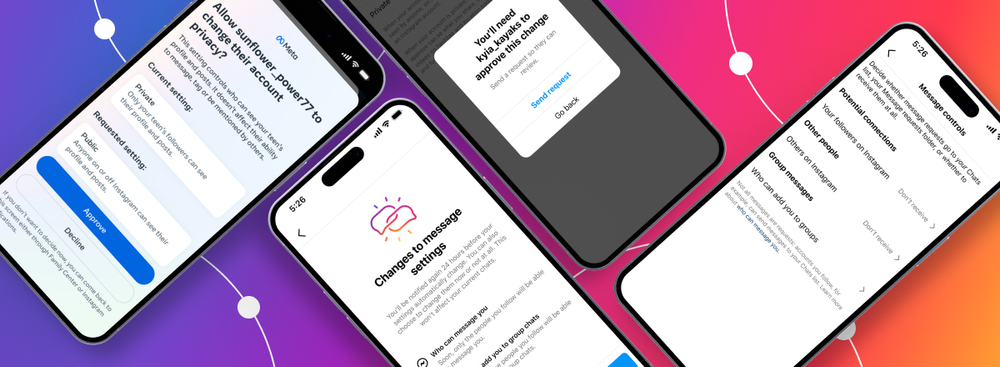

Under the new defaults, teens under 16 (under 18 in certain regions) can receive messages only from people they follow or are connected with on Instagram and Messenger. Any attempt to change this setting will require approval from a parent or guardian.

Alongside the messaging limits, Meta is strengthening its parental supervision tools. Parents will now be able to approve or deny their teenagers' requests to change default privacy settings. Previously, parents were notified of such changes but had limited control over them.

Meta is also developing a feature to prevent teenagers from viewing inappropriate content in their direct messages, and plans for it to work in encrypted chats as well. The company has not yet disclosed how the feature will work, but says it is intended to discourage young users from sharing such content.

The updates come amid growing concern about online safety and the protection of minors on social media, and as the company faces ongoing regulatory scrutiny and legal challenges over child safety and harmful content on its platforms.